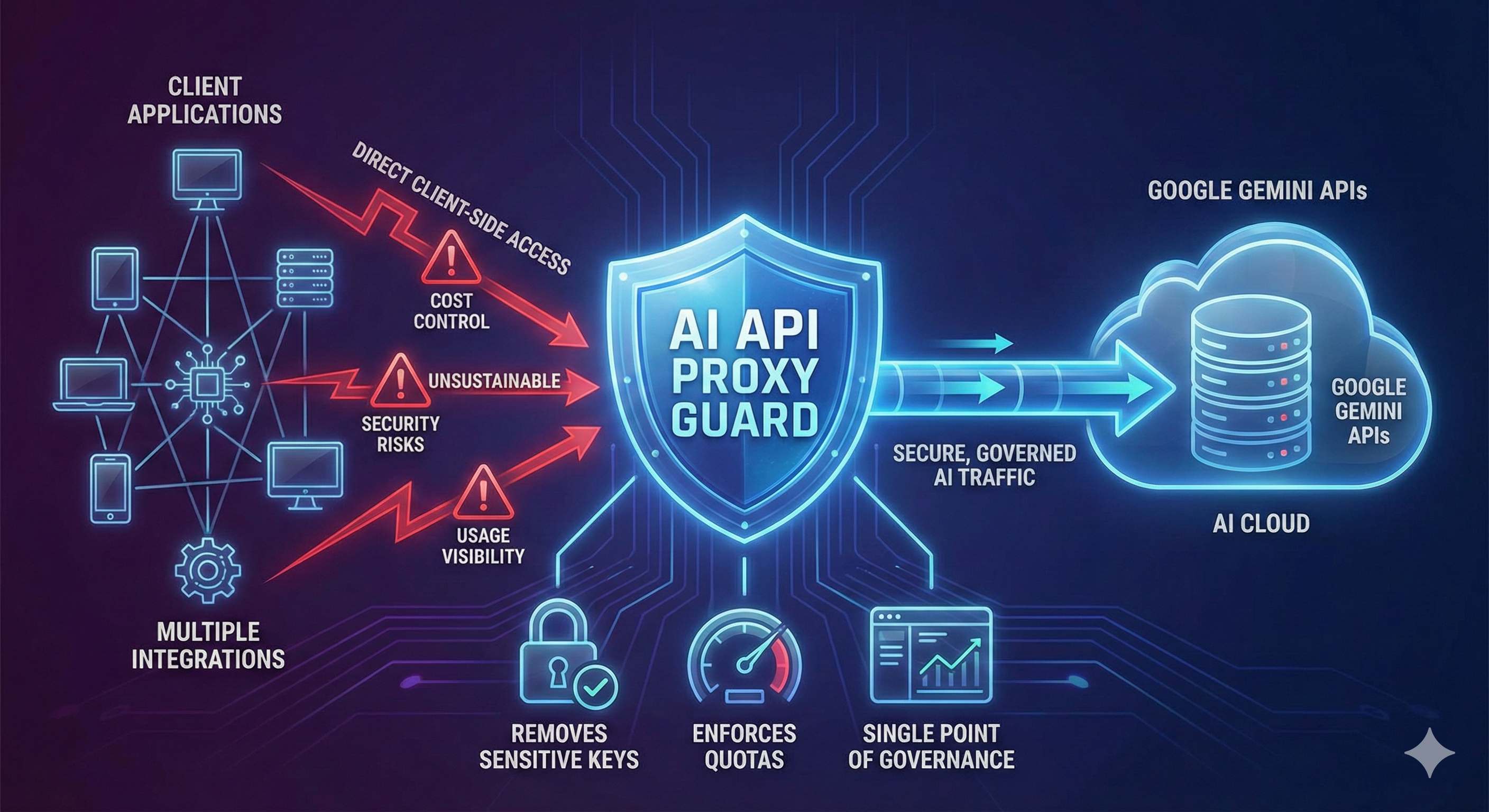

AI-powered features introduce new operational risks, particularly around cost control, security, and usage visibility. As multiple applications began integrating Gemini models, it became clear that direct client-side API access was unsustainable in a production environment. AI API Proxy Guard was built as a central control layer to sit between applications and Google's Gemini APIs. It enforces quotas, removes sensitive keys from the frontend, and provides a single point of governance for all AI traffic.

Identified operational risks with direct Gemini API access. Analysed cost patterns and security requirements across multiple applications.

Architected proxy layer with quota management, key abstraction, and centralised logging. Defined API contracts for client integrations.
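As an illustration of what a client contract might look like, the sketch below validates an incoming request body before it reaches any upstream call. The `GenerateRequest` shape, its field names, and the `MAX_PROMPT_CHARS` limit are assumptions made for this example, not the contract used in the project.

```typescript
// Hypothetical contract for a client request to the proxy (illustrative only).
interface GenerateRequest {
  appId: string;  // which application is calling
  model: string;  // model name the client wants to use
  prompt: string; // text to forward upstream
}

type ValidationResult =
  | { ok: true; value: GenerateRequest }
  | { ok: false; errors: string[] };

// Assumed upper bound on prompt size; a real limit would come from configuration.
const MAX_PROMPT_CHARS = 8000;

function validateGenerateRequest(input: unknown): ValidationResult {
  if (typeof input !== "object" || input === null) {
    return { ok: false, errors: ["body must be a JSON object"] };
  }
  const body = input as Record<string, unknown>;
  const errors: string[] = [];

  // Every contract field must be present as a non-empty string.
  for (const field of ["appId", "model", "prompt"]) {
    const value = body[field];
    if (typeof value !== "string" || value.trim() === "") {
      errors.push(`${field} must be a non-empty string`);
    }
  }

  // Reject oversized prompts early, since they drive cost.
  if (typeof body.prompt === "string" && body.prompt.length > MAX_PROMPT_CHARS) {
    errors.push(`prompt must be at most ${MAX_PROMPT_CHARS} characters`);
  }

  if (errors.length > 0) {
    return { ok: false, errors };
  }
  return {
    ok: true,
    value: {
      appId: body.appId as string,
      model: body.model as string,
      prompt: body.prompt as string,
    },
  };
}
```

Returning a list of errors rather than throwing lets the proxy answer with a single 400 response that names every problem in the request.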

Built Node.js backend service with Cloud Run deployment. Implemented rate limiting, request validation, and Gemini API forwarding.
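The rate limiting step can be sketched as a per-client fixed-window counter. This is a minimal in-memory illustration, not the service's actual implementation: the `FixedWindowLimiter` class and its parameters are hypothetical, and since Cloud Run can run several instances at once, a production limiter would likely need a shared store rather than a local map.

```typescript
// Hypothetical per-client fixed-window rate limiter (in-memory, single instance).
interface WindowState {
  windowStart: number; // ms timestamp when the current window began
  count: number;       // requests allowed so far in the current window
}

class FixedWindowLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  private readonly windows = new Map<string, WindowState>();

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the request is allowed. `now` is injectable for testing.
  allow(clientId: string, now: number = Date.now()): boolean {
    let state = this.windows.get(clientId);

    // Start a fresh window for new clients or once the old window has expired.
    if (state === undefined || now - state.windowStart >= this.windowMs) {
      state = { windowStart: now, count: 0 };
      this.windows.set(clientId, state);
    }

    if (state.count >= this.limit) {
      return false; // over quota for this window
    }
    state.count += 1;
    return true;
  }
}

// Usage: allow at most 2 requests per client per second.
const limiter = new FixedWindowLimiter(2, 1000);
const firstAllowed = limiter.allow("app-a", 0);
```

A fixed window is the simplest policy to reason about. A token bucket or sliding window would smooth out bursts at window boundaries, at the cost of slightly more state per client.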

Ran load tests to verify scalability. Conducted security audit of credential handling and API endpoint protection.

Deployed to Google Cloud Run with monitoring dashboards. Migrated frontend applications to use proxy endpoints.

Ongoing cost analysis, quota tuning, and performance improvements based on usage patterns and client feedback.

Proxy Guard deployed to production. All frontend applications migrated to use centralised API endpoints.

Cloud Monitoring dashboards configured with cost alerts and usage quotas. Rate limiting policies implemented.

Core proxy architecture completed. Node.js service deployed to Cloud Run with auto-scaling configuration.

Project initiated after identifying cost and security risks with direct client-side Gemini API access.
